RSS Feed Generator, Create RSS feeds from URL

RSS Feed Integrations: Make your RSS feed work better by integrating with your favorite platforms. Save time by connecting your tools…

The 2024 EchoPark Automotive 400 takes place on Sunday at 3 p.m. ET from Texas Motor Speedway. Kyle Larson is…

(NerdWallet) – Traveling means confronting dozens of problems that our seemingly advanced civilization should have solved by now. Are those…

"The models and data are telling one remarkably consistent story." Small Bang: Scientists seem to have figured out why the Moon is…

'The Talk' will end in December 2024. The long-running TV talk show has been renewed for a 15th and final season, with…

As if scammers couldn't sink any lower, there's a new online scam taking advantage of grieving people. It's a strange pirate…

(Reuters) - Canada has warned citizens to avoid all travel to Israel, Gaza and the West Bank, upgrading its risk…

To the editor: All levels of government have a responsibility to protect our environment. Elected officials become the public’s trustees with…

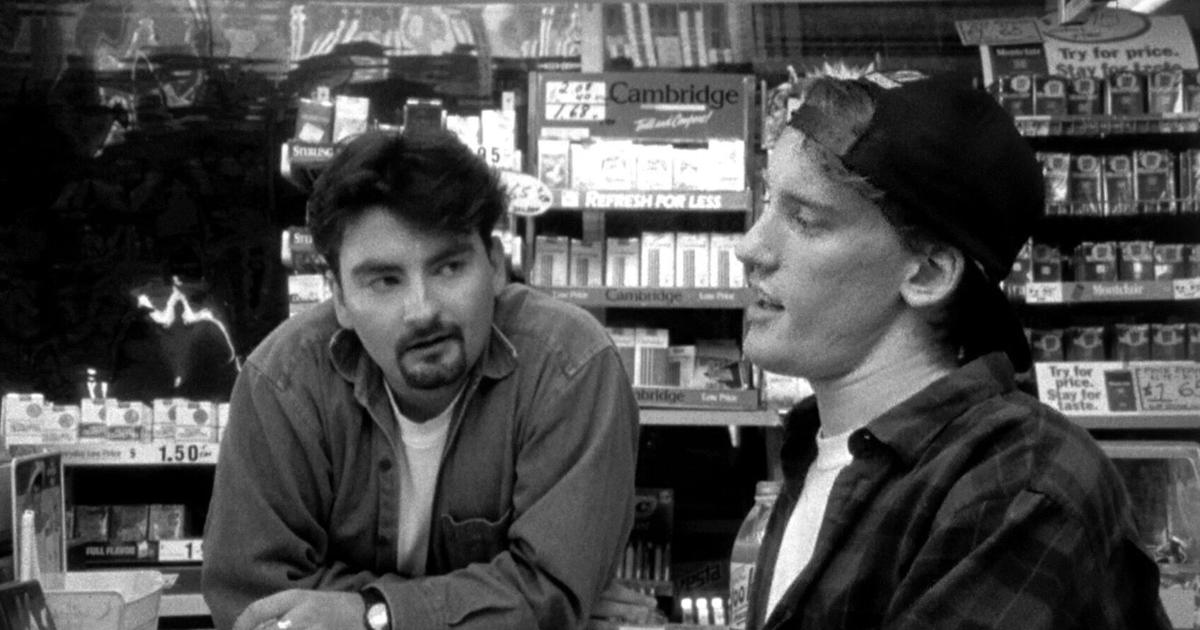

This weekend kicks off the Nevada City Film Festival’s annual Comedy Nights, April 12-14, at the historic Nevada Theatre in…

By now, you've probably heard the buzz surrounding Amazon's live-action Fallout show. And as it turns out, it is a…

© 2022 The News Dairy | Design by AmpleThemes
